The Growing "Contentful" Gap

You may know, if you’ve been following along, that Chrome shipped some changes to how it defines “contentful” for Largest Contentful Paint: it now looks at the level of entropy for an image (measured in bits per pixel, or bpp) to see if it’s above a certain threshold.

What you might not realize is that the same logic doesn’t apply (yet) to First Contentful Paint.

I put together a super simple demo based on a real-world approach to lazy-loading images, but with a couple of small changes to make it easier to see what’s happening.

The page first loads a placeholder image. In the real world, I’ve usually seen this as plain white, but to make it stand out, it’s an unmissable bright pink. Then the actual image is loaded to replace it (I added a one-second delay to make the swap easier to see).
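The swap itself can be sketched roughly like this, assuming the page starts with the pink placeholder in the image’s `src` and a script replaces it with the real file after the artificial delay. The `ImageLike` type, the `swapAfterDelay` helper and the file names are hypothetical, for illustration only.

```typescript
// Anything with a writable `src` works; in a browser this would be an
// HTMLImageElement. Typed structurally so the sketch also runs outside a browser.
type ImageLike = { src: string };

// Hypothetical helper: leave the placeholder in place for `delayMs`, then point
// the element at the real image. The delay only exists to separate the two paints.
function swapAfterDelay(img: ImageLike, realSrc: string, delayMs = 1000): Promise<void> {
  return new Promise<void>((resolve) => {
    setTimeout(() => {
      img.src = realSrc; // the real image paints once it has downloaded
      resolve();
    }, delayMs);
  });
}

// Usage in a page (element id and file name are made up):
// const hero = document.querySelector<HTMLImageElement>("#hero");
// if (hero) swapAfterDelay(hero, "photo.jpg");
```

A production lazy-loader would usually wait for the real image to finish loading (or lean on `loading="lazy"`) rather than a fixed timer; the timer here just mirrors the demo’s artificial delay.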

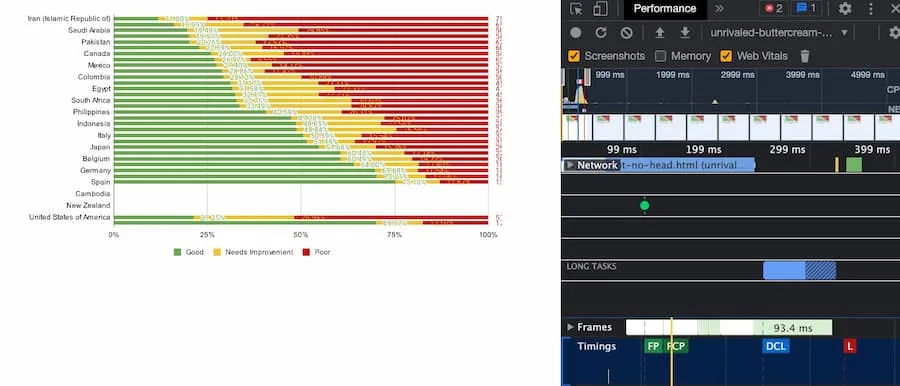

In this test result, the First Contentful Paint (FCP) fires at 99ms, and the Largest Contentful Paint (LCP) fires at 1.2s.

In Chrome DevTools, we can see the FCP metric (triggered by the placeholder image) firing much earlier than the LCP metric (triggered by the actual image that is lazy-loaded).
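The same two timings can be read from the page itself with `PerformanceObserver`, using the `paint` entry type (which includes the `first-contentful-paint` entry) and the `largest-contentful-paint` entry type. This is a minimal sketch assuming a Chromium-based browser; the structural types are my own stand-ins so the snippet compiles with or without the DOM typings, and `watchPaintMetrics` is a hypothetical name. Where those entry types aren’t exposed, it simply returns `false`.

```typescript
// Structural stand-ins for the DOM performance types (an assumption, so this
// compiles without lib.dom). Only the fields read below are declared.
type PaintEntryLike = {
  name: string; // "first-contentful-paint" for the FCP entry
  startTime: number; // milliseconds since navigation start
  element?: { tagName: string } | null; // set on LCP entries
};
type ObserverLike = { observe(options: { type: string; buffered?: boolean }): void };
type ObserverCtor = {
  new (callback: (list: { getEntries(): PaintEntryLike[] }) => void): ObserverLike;
  supportedEntryTypes?: readonly string[];
};

function watchPaintMetrics(log: (line: string) => void = console.log): boolean {
  const PO = (globalThis as unknown as { PerformanceObserver?: ObserverCtor })
    .PerformanceObserver;
  const supported: readonly string[] = PO?.supportedEntryTypes ?? [];
  // Bail out where paint/LCP entries aren't exposed (other browsers, Node, etc.).
  if (!PO || !supported.includes("paint") || !supported.includes("largest-contentful-paint")) {
    return false;
  }

  // FCP: look for the "paint" entry named "first-contentful-paint".
  new PO((list) => {
    for (const entry of list.getEntries()) {
      if (entry.name === "first-contentful-paint") {
        log(`FCP at ${Math.round(entry.startTime)}ms`);
      }
    }
  }).observe({ type: "paint", buffered: true });

  // LCP: a new entry arrives each time a larger candidate paints;
  // the most recent one is the current LCP.
  new PO((list) => {
    for (const entry of list.getEntries()) {
      const tag = entry.element?.tagName ?? "(no element)";
      log(`LCP candidate at ${Math.round(entry.startTime)}ms: ${tag}`);
    }
  }).observe({ type: "largest-contentful-paint", buffered: true });

  return true;
}

watchPaintMetrics();
```

On the demo page, I’d expect the FCP line to arrive early and the LCP candidate for the `IMG` to show up only after the real image replaces the placeholder.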

Here’s what the page looks like at both stages. On the left is what it looks like at FCP: our pink placeholder is visible. On the right is what the page looks like at LCP: the actual image has replaced the placeholder.

On the left, the placeholder image triggers the FCP metric. But because the image has a low bpp, the LCP metric isn’t triggered until the actual image loads (right).

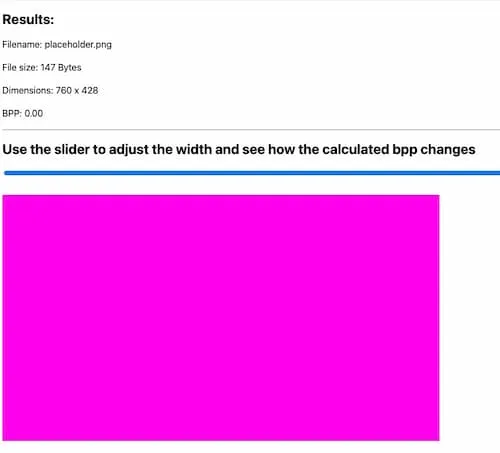

If I drop the placeholder image onto the bpp calculator I threw together, we can see that the bpp is…well, it’s tiny. The calculator rounds the result, but the actual number looks to be about 0.003.

The bpp for the placeholder image is tiny, well below the 0.05 threshold.

Since that’s well below the 0.05 bpp threshold that LCP now looks at, it doesn’t count as an LCP element. So even though it’s the same size as the actual image that comes in later, Chrome ignores it for its LCP measurement. (Funnily enough, I actually had to compress the image to get it below the threshold. When I exported it directly from Sketch, the file was large enough that the bpp exceeded the threshold, so the placeholder image was triggering the LCP metric.)
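The arithmetic behind that threshold is simple. Here is a rough sketch, assuming bpp is the image’s encoded size in bits divided by its pixel count; Chrome’s exact inputs (for example, whether it uses the intrinsic or the displayed size) may differ, and the function names and byte counts are hypothetical.

```typescript
// Threshold discussed above: images under 0.05 bits per pixel are treated
// as low-entropy and skipped by LCP. Handling of the exact boundary is assumed.
const LCP_MIN_BPP = 0.05;

// bits per pixel = (encoded size in bytes * 8) / (width * height)
function bitsPerPixel(fileSizeBytes: number, width: number, height: number): number {
  const pixels = width * height;
  if (pixels <= 0) throw new RangeError("width and height must be positive");
  return (fileSizeBytes * 8) / pixels;
}

// Would this image survive the LCP entropy check on its own?
// (FCP applies no such check.)
function passesLcpEntropyCheck(fileSizeBytes: number, width: number, height: number): boolean {
  return bitsPerPixel(fileSizeBytes, width, height) >= LCP_MIN_BPP;
}
```

For instance, a hypothetical 1000 by 500 placeholder weighing 200 bytes works out to 1,600 bits over 500,000 pixels, or 0.0032 bpp, in the same ballpark as the pink placeholder. A 60,000-byte photo at the same dimensions comes to 0.96 bpp, comfortably over the line.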

FCP, however, doesn’t care. The definition of “contentful” there doesn’t factor in bpp, so FCP fires when that placeholder image loads.

SVG gaps

That’s not the only situation where we have a gap between what “contentful” means in one scenario versus another.

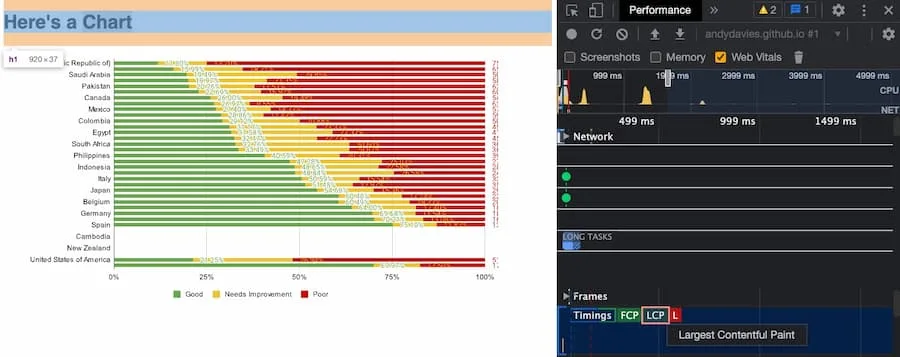

Andy Davies shared an example where two pages that look absolutely identical report two different LCP elements. In the first one, the chart is embedded as an svg element. When it comes to svg elements, LCP only considers a nested `<image>` as content, so in this case it reports the h1 element as the LCP element instead.

The chart is loaded as an svg element, which LCP does not report on. So instead, it reports the much smaller h1 element.

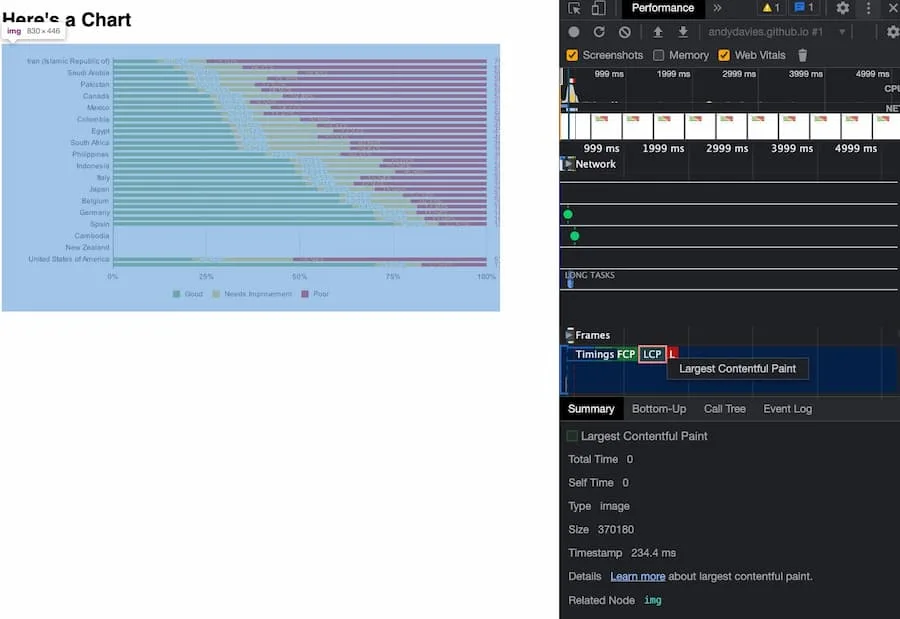

On his other page, it’s the same chart, but now it’s loaded using an img element.

`<img src="images/chart.svg" width="830" height="446">`

As a result, Chrome reports the LCP element as the chart, not the h1 element.

Using an img element to load the chart results in the LCP metric being attributed to the image now, not the much smaller h1 element.

Again, it’s worth re-emphasizing that in both situations FCP will consider the chart, whether it’s embedded or linked to externally.

We can see this more clearly if we remove the h1 altogether from the first example. Chrome reports the chart as the FCP element, but doesn’t report an LCP at all—since the embedded SVG is not considered “contentful” in the context of LCP, nothing ever triggers the metric.

With the h1 element removed, LCP won’t fire at all because it doesn’t count the SVG element as content.
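When auditing pages like these, it can help to record which element, if any, ends up as the final LCP candidate, and to flag pages where FCP fired but LCP never did. A minimal sketch, assuming the values were already collected in the page by a `PerformanceObserver`; the `PaintSummary` shape and `describeLcp` are hypothetical, and the numbers in the test are illustrative rather than measurements.

```typescript
// Hypothetical shape of what a page-side observer might have collected.
type LcpCandidate = { startTime: number; tagName: string | null };
type PaintSummary = { fcp: number | null; lcpCandidates: LcpCandidate[] };

// LCP emits a new entry each time a larger candidate paints, so the last
// candidate collected is the one that gets reported.
function describeLcp(summary: PaintSummary): string {
  const last = summary.lcpCandidates[summary.lcpCandidates.length - 1];
  if (!last) {
    return summary.fcp === null
      ? "no FCP or LCP recorded"
      : `FCP at ${summary.fcp}ms but no LCP entry: nothing painted qualified as an LCP candidate`;
  }
  return `LCP at ${last.startTime}ms on ${last.tagName ?? "an element no longer in the DOM"}`;
}
```

In the first version of Andy’s page this would name the `H1`; with the h1 removed, it falls into the “FCP but no LCP” branch.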

Opacity too…

Another gap comes into play when an element has an initial opacity of 0 and is then animated into place.

On the Praesens site, all the text animates into place. As a result, FCP gets reported, but not LCP.

All the text starts with an opacity of zero and then animates into place, so while it triggers FCP, it never triggers LCP.

To “solve” the issue and get LCP to report, they ended up adding a div to the page containing text that matches the background color (so it is never seen).

`<div class="lcp" aria-hidden="true">This site performs!</div>`

Ok. What gives?

“Contentful” means very different things for LCP and FCP because, while the two metrics sound very similar, they’re actually built on two different underlying specifications.

First Contentful Paint is built on top of the Paint Timing API, which has one definition of “contentful”. Largest Contentful Paint, however, is built on top of the Element Timing API, which actually has no definition of “contentful”, but does have a list of elements it will expose timing for.
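To make the mismatch concrete, here is a deliberately simplified model of the three cases in this post. It is not Chrome’s implementation, just the behavior observed above (a low-bpp placeholder, an embedded SVG without a nested `<image>`, and text that fades in from zero opacity) restated as code; the `PaintedThing` type and both functions are hypothetical.

```typescript
// Toy description of something that paints. Not a real browser type.
type PaintedThing =
  | { kind: "text"; fadedInFromZeroOpacity: boolean }
  | { kind: "image"; bitsPerPixel: number }
  | { kind: "inline-svg"; hasNestedImage: boolean };

// FCP (Paint Timing): in every case described above, the paint counted.
function countsForFcp(_thing: PaintedThing): boolean {
  return true;
}

// LCP: each case above was excluded, for a different reason.
function countsForLcp(thing: PaintedThing): boolean {
  if (thing.kind === "text") return !thing.fadedInFromZeroOpacity; // animated-in text never triggered LCP
  if (thing.kind === "image") return thing.bitsPerPixel >= 0.05; // low-entropy placeholders are skipped
  return thing.hasNestedImage; // inline svg: only a nested <image> counts
}

const cases: PaintedThing[] = [
  { kind: "image", bitsPerPixel: 0.003 }, // the pink placeholder
  { kind: "inline-svg", hasNestedImage: false }, // the embedded chart
  { kind: "text", fadedInFromZeroOpacity: true }, // the animated-in text
];
for (const thing of cases) {
  console.log(thing.kind, "FCP:", countsForFcp(thing), "LCP:", countsForLcp(thing));
}
```

Every row in that loop counts for FCP and not for LCP, which is the whole gap in a nutshell.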

That feels…not ideal. It’s certainly a bit confusing and leads to situations where folks are going to be scratching their heads trying to sort out why their measurements may look off—or be missing entirely.

I assume solving this requires either making both metrics use the same underlying API, or abstracting the definition of “contentful” so that both the Paint Timing API and Element Timing API have a definition, and that definition matches across both.

Another potential solution could be to rename Largest Contentful Paint. Ironically, after all the fine-tuning Chrome has done, Largest Meaningful Paint feels most accurate based on what they’re trying to accomplish, but of course that would invite confusion with First Meaningful Paint (may it rest in peace).

I’m also really curious to see what happens when other browsers start to support LCP. Currently, it’s supported only in Chromium-based browsers, though I do know that Firefox is working on it (hopefully that means Element Timing support is coming to Firefox too!). It’ll be interesting to see how closely Firefox decides to match Chrome’s heuristics around “contentful” in the LCP metric. It almost feels like they’d have to, to avoid confusion when folks compare across both browsers, but that’s just speculation on my part.

Until and unless something happens to align the definitions, it will be important for anyone measuring both FCP and LCP to remember that, in this case, contentful in one doesn’t equal contentful in the other, and that may lead to some odd disconnects.