Missing

I was analyzing a site’s performance and I stumbled upon a particularly nefarious third-party widget.

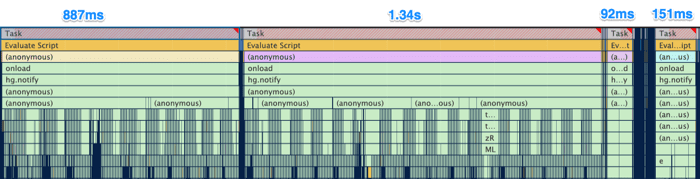

I ran a profile on my MacBook Pro (a maxed-out 2018 model) in Chrome Developer Tools to see how much work it was doing on the main thread.

It wasn’t pretty.

It turns out the widget triggers four long-running tasks, one right after the other. The first task came in at 887 ms, followed by a 1.34 s task, followed by another at 92 ms and, finally, a 151 ms task. That’s around 2.5 seconds where the main thread of the browser is so occupied with trying to get this widget up and running that it has no breathing room for anything else: painting, handling user interaction, etc.
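To make the arithmetic concrete, here is a minimal sketch that totals those four tasks. The `TaskTiming` shape and the `summarizeLongTasks` helper are hypothetical names made up for illustration. The 50 ms cutoff is the usual definition of a long task, and counting only the time beyond that cutoff as "blocking" is a common convention, not something measured in the profile above.

```typescript
// Hypothetical shape for a main-thread task; only the duration matters here.
interface TaskTiming {
  name: string;
  durationMs: number;
}

// Tasks that run longer than 50 ms are conventionally called "long tasks".
const LONG_TASK_THRESHOLD_MS = 50;

interface LongTaskSummary {
  count: number;      // how many tasks exceeded the threshold
  totalMs: number;    // combined duration of those tasks
  blockingMs: number; // combined time spent beyond the threshold
}

function summarizeLongTasks(tasks: TaskTiming[]): LongTaskSummary {
  const longTasks = tasks.filter((t) => t.durationMs > LONG_TASK_THRESHOLD_MS);
  const totalMs = longTasks.reduce((sum, t) => sum + t.durationMs, 0);
  const blockingMs = longTasks.reduce(
    (sum, t) => sum + (t.durationMs - LONG_TASK_THRESHOLD_MS),
    0
  );
  return { count: longTasks.length, totalMs, blockingMs };
}

// Durations taken from the desktop profile described above.
const widgetTasks: TaskTiming[] = [
  { name: "task 1", durationMs: 887 },
  { name: "task 2", durationMs: 1340 },
  { name: "task 3", durationMs: 92 },
  { name: "task 4", durationMs: 151 },
];

const summary = summarizeLongTasks(widgetTasks);
console.log(summary); // count: 4, totalMs: 2470, blockingMs: 2270
```

In a real page, the durations could come from a `PerformanceObserver` watching the `longtask` entry type instead of a hard-coded list.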

2.5 seconds of consecutive long tasks is bad by any measure, but especially on such a high-end desktop machine. If a souped-up laptop is struggling with this code, how much worse is the experience on something with less power?

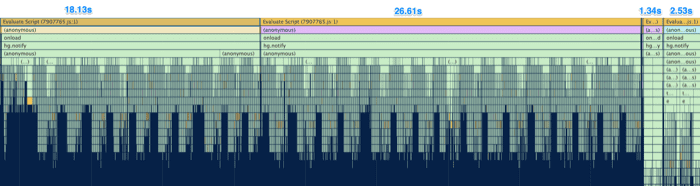

I decided to find out, so I fired up my Alcatel 1x. It’s one of my favorite Android testing devices: it’s a great stress test for sites and it lets me see what happens when circumstances are less than ideal.

I knew the numbers would be worse, but I admit the magnitude was a bit more dramatic than I anticipated. That 2.5 seconds of consecutive long tasks? That ballooned into a whopping 48.6 seconds.

48.6.

That’s not a typo.

Eventually, Chrome fires a “Too much memory used” notification and gives up.

Here’s the kicker: among the things being blocked for nearly 50 seconds are all the various analytics beacons.

As Andy Davies puts it, “Site analytics only show the behaviour of those visitors who’ll tolerate the experience being delivered.” Sessions like this are extremely unlikely to ever show up in your data. Far more often than not, folks are going to jump away before analytics ever has a chance to note that they existed.
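A toy model makes the blind spot easier to see. This is only a sketch: the `Visitor` shape, the `isRecorded` helper, and the 10-second patience value are assumptions made up for illustration. Only the two blocked durations come from the measurements above.

```typescript
// Hypothetical model: a session is recorded only if the analytics beacon
// gets a chance to run before the visitor gives up and leaves.
interface Visitor {
  device: string;
  blockedMs: number;  // main-thread time consumed before the beacon can fire
  patienceMs: number; // how long this visitor waits before leaving (assumed)
}

function isRecorded(v: Visitor): boolean {
  return v.blockedMs < v.patienceMs;
}

const visitors: Visitor[] = [
  // 2470 ms and 48600 ms are the measured long-task totals;
  // the 10000 ms patience figure is an assumption, not data.
  { device: "high-end laptop", blockedMs: 2470, patienceMs: 10000 },
  { device: "low-end Android", blockedMs: 48600, patienceMs: 10000 },
];

const recorded = visitors.filter(isRecorded).map((v) => v.device);
console.log(recorded); // only "high-end laptop" makes it into the data
```

Under those assumptions both visitors showed up, but the analytics would report just one of them.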

There are plenty of stories floating around about how some organization improved performance and suddenly saw an influx of traffic from places they hadn’t expected. This is why. We build an experience that is completely unusable for them, and is completely invisible to our data. We create what Kat Holmes calls a “mismatch”. So we look at the data and think, “Well, we don’t get any of those low-end Android devices so I guess we don’t have to worry about that.” A self-fulfilling prophecy.

I’m a big advocate for ensuring you have robust performance monitoring in place. But just as important as analyzing what’s in the data is considering what’s not in the data, and why that might be.