Chrome's NOSCRIPT Intervention

The other week, a few articles came out about Chrome’s NOSCRIPT intervention: an intervention that would disable JavaScript altogether on slow networks. Chrome intervening on behalf of the user when it feels the network is iffy isn’t exactly new. Chrome has several interventions, including one that can replace images with placeholders and one that bypasses web fonts on slow connections. The NOSCRIPT intervention itself isn’t even new. From the looks of it, it’s been around since January (just disabled by default until now).

But disabling JavaScript is a much more controversial move, it appears. Web fonts fall back very easily to system fonts, so disabling web fonts is not a huge deal to most. JavaScript, however, isn’t always treated as progressive enhancement (as much as I feel it should be), so when it goes missing, the consequences can be a bit more significant.

As you would expect, then, there’s been a lot of ensuing conversation. However, all the articles I had read were speculating on what the intervention would look like, not what it does. It took a little digging through Blink issues, but I eventually figured out how to reliably fire up the NOSCRIPT preview so that I could test it out.

What exactly does it do?

When the preview is enabled, the browser will download any necessary resources to display the page except for any JavaScript. External JavaScript files will not be requested, and inline JavaScript will not be executed. (Though it does appear that if a service worker has been installed for the domain, it will still execute.)

The browser will do all the rest of the work necessary to display the page and present it to the user, with an information bar telling the user that the page has been modified to save data and giving them the option to view the “original”. When they click on the information bar, the original page is downloaded and displayed, JavaScript included.

When I first read about the intervention, I thought the preview was some sort of static snapshot, but it’s fully interactive. Provided the site works without JavaScript, I can click from page to page, reading articles or shopping for the product I want to buy.

Taking it for a test drive

To test the intervention, you’ll need to toggle a few flags to make sure you can see the NOSCRIPT preview. Once it’s enabled by default on Android, which presumably will happen in Chrome 69, this won’t be necessary.

To toggle the flags, open Chrome on your Android device and navigate to chrome://flags. #allow-previews and #enable-noscript-previews must each be enabled. #enable-optimization-hints should be disabled (we’ll come back to that later). You’ll also need to set the #force-effective-connection-type flag to ‘2G’ or slower.

When does it kick in?

The intervention kicks in when two criteria are met (it’s a bit more complicated than that, but we’ll get to that in a minute):

- The effective network connection type is 2G or slower

- The Data Saver proxy is enabled

If you want to see the intervention in action, you’ll need to make sure Data Saver is running (Chrome > Settings > Data Saver).

In real use, Chrome estimates the effective connection type (ECT), the same value it exposes to pages through the Network Information API, and, if it is 2G or slower, uses the NOSCRIPT intervention. For testing purposes, you can force Chrome to always treat the ECT as 2G using the #force-effective-connection-type flag I mentioned earlier.
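The Network Information API reports the effective connection type as one of `slow-2g`, `2g`, `3g` or `4g`. Here’s a minimal sketch of the two basic criteria, assuming those four values and treating anything unrecognized as fast. The function names are hypothetical; this is an illustration of the rule, not Chrome’s code.

```python
# Effective connection types reported by the Network Information API,
# ordered from slowest to fastest.
ECT_ORDER = ["slow-2g", "2g", "3g", "4g"]


def is_2g_or_slower(effective_type: str) -> bool:
    """Return True if the effective connection type is 2G or slower.

    Hypothetical helper: unknown values are treated as fast, so bad
    input never triggers the intervention.
    """
    if effective_type not in ECT_ORDER:
        return False
    return ECT_ORDER.index(effective_type) <= ECT_ORDER.index("2g")


def noscript_criteria_met(effective_type: str, data_saver_enabled: bool) -> bool:
    # Both criteria must hold: a slow network AND Data Saver switched on.
    return data_saver_enabled and is_2g_or_slower(effective_type)
```

Forcing the ECT flag to ‘2G’ is, in effect, making `is_2g_or_slower` return True no matter what the real network looks like.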

On the surface, the decision to apply the intervention seems straightforward: if the network is slow and the user has decided to let Chrome help them out in those situations, you’ll get the NOSCRIPT intervention. The reality is a little more complex than that.

For one, there are a whitelist and a blacklist that can opt domains in or out of this optimization. It appears there are lists on the browser side as well as on the user side. I’m not clear on all the ways those lists can be populated, but it does look like if the user opts out of the same host often enough, the host is added to the preview blacklist. There is also a short period (about 5 seconds, from the looks of it) after a user opts out during which Chrome won’t apply the intervention on any site.

Another wrinkle is that the NOSCRIPT intervention is far from the only option Data Saver has to reduce page bloat. There are other optimizations, and even other previews (like the LOFI preview which will load image placeholders instead of actual images). Again, I’m not 100% certain about the logic they’re using to determine when a given preview is the correct option, but it does appear there’s some thought applied here: they’re not just applying the NOSCRIPT intervention to every page that comes along.

That’s where the #enable-optimization-hints flag I mentioned earlier comes in. Enabled by default, this flag lets Chrome use “hints” to determine when and where certain optimizations should apply. Right now, with optimization hints enabled, the NOSCRIPT intervention is only applied to whitelisted requests. I suspect they may get more aggressive with the optimization after they’ve had it running like this for a while. In the meantime, to see it in effect consistently, we need to disable those hints.
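To make those extra variables a bit more concrete, here’s a rough model of the decision in Python. Treat it as a sketch under loud assumptions: it collapses the browser-side and user-side lists into one whitelist and one blacklist, the opt-out threshold is a guess, and every name is hypothetical. Only the roughly 5-second cooldown and the “hints require a whitelisted request” rule come from what I observed.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set

# Observed: previews pause for about 5 seconds after any opt-out.
RECENT_OPT_OUT_COOLDOWN_S = 5.0
# Hypothetical: how many opt-outs for one host before it is blacklisted.
OPT_OUTS_BEFORE_BLACKLIST = 4


@dataclass
class PreviewState:
    """Hypothetical per-user state; Chrome's real lists are more involved."""

    whitelist: Set[str] = field(default_factory=set)
    blacklist: Set[str] = field(default_factory=set)
    opt_outs: Dict[str, int] = field(default_factory=dict)
    last_opt_out: Optional[float] = None

    def record_opt_out(self, host: str, now: float) -> None:
        """The user asked to see the original page for this host."""
        self.opt_outs[host] = self.opt_outs.get(host, 0) + 1
        self.last_opt_out = now
        if self.opt_outs[host] >= OPT_OUTS_BEFORE_BLACKLIST:
            self.blacklist.add(host)


def should_show_noscript_preview(
    state: PreviewState,
    host: str,
    now: float,
    slow_network: bool,
    data_saver: bool,
    hints_enabled: bool,
) -> bool:
    # The two basic criteria: a 2G-or-slower ECT and Data Saver on.
    if not (slow_network and data_saver):
        return False
    # Hosts the user keeps opting out of are excluded.
    if host in state.blacklist:
        return False
    # A recent opt-out anywhere briefly suppresses previews on every site.
    if state.last_opt_out is not None and now - state.last_opt_out < RECENT_OPT_OUT_COOLDOWN_S:
        return False
    # With optimization hints enabled, only whitelisted hosts qualify.
    if hints_enabled and host not in state.whitelist:
        return False
    return True
```

In this model, disabling #enable-optimization-hints is the same as passing `hints_enabled=False`, which is why turning the flag off makes the preview show up consistently while testing.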

So yes, it does kick in on 2G networks with Data Saver enabled, but as you can see, there are more variables at play.

It works on HTTPS too

Before testing, I made the (mistaken) assumption that since the NOSCRIPT preview intervention was tied to Data Saver, it wouldn’t apply to HTTPS sites. Data Saver, like most proxy browsers and services, tends to leave HTTPS alone. But it looks like I was wrong: the NOSCRIPT intervention appears to work on both HTTP and HTTPS sites.

I guess it makes sense. The reason Data Saver (and other proxy services and browsers) leave HTTPS alone is that applying any transformations to the content would require that they essentially act as a man-in-the-middle.

In this case, however, they aren’t transforming the content in any way. The NOSCRIPT previews simply don’t execute JavaScript or request external JavaScript files.

How do developers know the intervention has been applied?

When the intervention kicks in, all requests will have an intervention header applied to them, like so:

<https://www.chromestatus.com/features/4775088607985664>; level="warning"

The presence of the header is enough to indicate that the browser applied some sort of intervention, and the URL in the header will point to more information about the specific intervention applied.
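On the server, you could pull the URL and level out of that header with a small parser. This is a minimal sketch, assuming request headers arrive as a plain mapping and the value has the `<url>; level="..."` shape shown above; `parse_intervention` is a hypothetical name. Since the main document doesn’t currently seem to get the header, expect it to fire only on subresource requests, and note that it deliberately ignores Save-Data.

```python
import re
from typing import Mapping, Optional, Tuple

# Matches values like: <https://www.chromestatus.com/features/...>; level="warning"
_INTERVENTION_RE = re.compile(r'<([^>]+)>\s*(?:;\s*level="?([^";]+)"?)?')


def parse_intervention(headers: Mapping[str, str]) -> Optional[Tuple[str, Optional[str]]]:
    """Return (info_url, level) if the request carries an Intervention header."""
    # HTTP header names are case-insensitive.
    value = next((v for k, v in headers.items() if k.lower() == "intervention"), None)
    if value is None:
        return None
    match = _INTERVENTION_RE.search(value)
    if match is None:
        return None
    # level is None when the header omits it.
    return match.group(1), match.group(2)
```

Logging the returned URL alongside the request makes it easy to see which intervention (NOSCRIPT or otherwise) a given visit experienced.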

There’s one notable exception: the main document does not appear to get the intervention header currently. Honestly, this may just be a bug as it’s not clear to me why the header wouldn’t be applied to the main document.

All requests (including the main document) will also have the save-data header set to on, but you shouldn’t rely on that as an indication of an intervention. The save-data header will be applied whenever the proxy is enabled (or, really, any proxy service or browser that supports the header), regardless of whether the browser applied any interventions.

If you’re actively testing, you can also fire up chrome://interventions-internals/ in Chrome on your device and follow the logs to confirm when the NOSCRIPT intervention has been applied.

What does this mean for users?

For users, the intervention can be very effective for certain sites. I loaded up 10 different sites with the NOSCRIPT intervention enabled and disabled to see the difference.

| URL | NOSCRIPT Weight (KB) | Original Weight (KB) | Change in Weight |
|---|---|---|---|
| https://www.wayfair.com/ | 164 | 3277 | -95.0% |
| https://www.aliexpress.com/ | 72 | 2150 | -96.6% |
| https://www.linkedin.com/ | 151 | 1536 | -90.2% |
| https://www.reddit.com/ | 295 | 1126 | -73.8% |
| https://www.bbc.com/news | 354 | 467 | -24.2% |
| https://www.theatlantic.com/ | 11673 | 2970 | +293.0% |
| https://techcrunch.com/ | 548 | 2867 | -80.9% |
| https://www.theverge.com/ | 68198 | 3174 | +2048.4% |
| https://www.cnn.com/ | 418 | 7784 | -94.6% |
| https://www.nytimes.com/ | 379 | 16650 | -97.7% |
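The Change in Weight column is the difference relative to the original page: (NOSCRIPT weight - original weight) / original weight × 100. A quick check of that arithmetic against a few rows of the table:

```python
def weight_change_pct(noscript_kb: float, original_kb: float) -> float:
    """Percentage change in page weight when the NOSCRIPT preview is applied."""
    return (noscript_kb - original_kb) / original_kb * 100


rows = {
    "wayfair.com": (164, 3277),
    "reddit.com": (295, 1126),
    "theatlantic.com": (11673, 2970),
}

for site, (noscript_kb, original_kb) in rows.items():
    # Prints e.g. "wayfair.com: -95.0%"
    print(f"{site}: {weight_change_pct(noscript_kb, original_kb):+.1f}%")
```

Recomputing AliExpress and The Verge from the KB columns gives -96.7% and +2048.6%, slightly off from the table, presumably because the KB figures are rounded.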

The two results that jump out right away as oddities are The Atlantic and The Verge, which got a whopping 293% and 2048% heavier, respectively, without JavaScript. In case you’re curious (I was), it’s because they lazy-load a lot of images with JavaScript. For situations where JavaScript is not available, they wrap a fallback image in a <noscript> element. Unfortunately for visitors to both sites, several of those fallback images are massive, ranging from 1.6MB to 9.9MB.

When the optimization works, which is more often than not (8 of the 10 sites here), it works very well. The smallest improvement was a 24% reduction in data usage (BBC News), and the remaining sites shed between 74% and 98% of their bytes.

It’s possible you would get similar results from the LOFI preview (which displays placeholders instead of the site’s actual images by default) for many of these sites. It’s worth noting though that the NOSCRIPT intervention has the added benefit of reducing the amount of work the actual device has to do. Images may account for the majority of network weight, but on the CPU, JavaScript is the worst offender by far.

What does this mean for site owners?

Whenever something like this comes up, naturally people want to know how to make sure their site isn’t negatively impacted. The appropriate response is to make sure you serve a usable experience even if JavaScript isn’t enabled. That doesn’t mean you can’t use React, Vue or the like, but it does mean you should use server-side rendering if you do. The less your site relies on client-side JavaScript, the better it will appear when the intervention is applied. Treat JavaScript as an enhancement and you’re good to go.

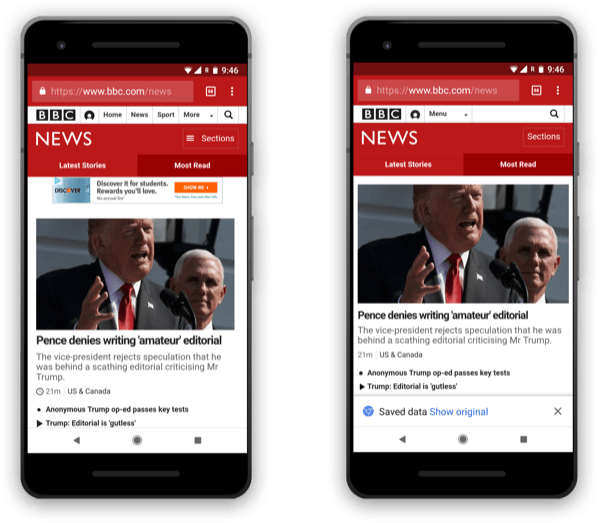

The BBC site is a good example. Below you can see the mobile site (left) and the NOSCRIPT preview (right). There is very little difference. The branding is retained, and the content is readable.

The BBC site is a great example of how good the NOSCRIPT preview can look when JavaScript is treated as progressive enhancement: all the content and branding is in place.

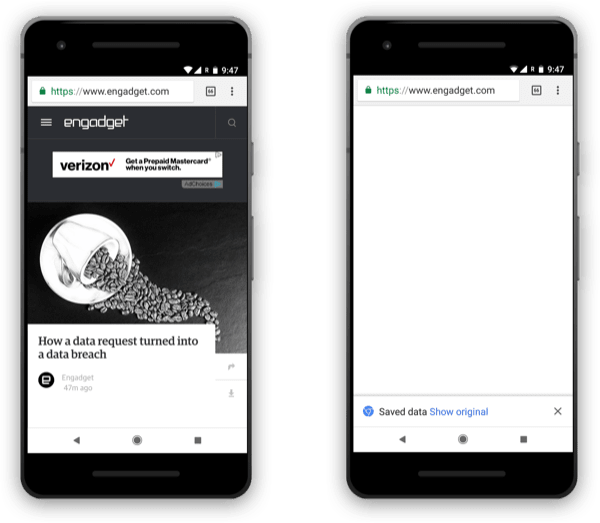

Contrast that with the Engadget site, which displays nothing whatsoever in the NOSCRIPT preview:

Since Engadget requires JavaScript to display their content, the NOSCRIPT preview is blank.

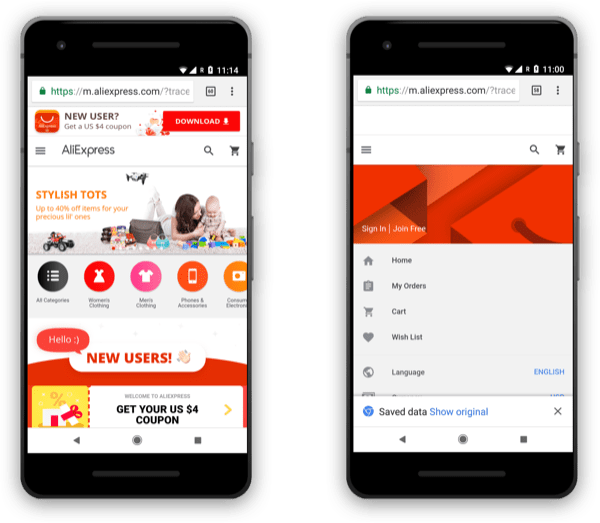

Or AliExpress which does have some navigation displayed (kind of), but no branding:

AliExpress.com shows at least a little navigation in the NOSCRIPT preview, but there’s no branding without JavaScript enabled.

You can, technically, opt out of the intervention altogether by setting Cache-Control: no-transform on the response for your main document. The no-transform value tells proxy services not to modify the content, and the intervention respects that: setting it ensures no one will ever see a NOSCRIPT preview for your site.
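Mechanically, this only means adding the directive to the main document’s Cache-Control response header. A minimal sketch, assuming your framework lets you build response headers as a plain dict; `add_no_transform` is a hypothetical helper. It appends to any existing Cache-Control value rather than overwriting it, so caching directives like max-age survive.

```python
from typing import Dict


def add_no_transform(headers: Dict[str, str]) -> Dict[str, str]:
    """Return a copy of headers with no-transform added to Cache-Control."""
    updated = dict(headers)
    # Reuse an existing Cache-Control key regardless of its casing.
    key = next((k for k in updated if k.lower() == "cache-control"), "Cache-Control")
    directives = [d.strip() for d in updated.get(key, "").split(",") if d.strip()]
    # Avoid adding the directive twice.
    if not any(d.lower() == "no-transform" for d in directives):
        directives.append("no-transform")
    updated[key] = ", ".join(directives)
    return updated
```

For example, `{"Cache-Control": "max-age=600"}` becomes `{"Cache-Control": "max-age=600, no-transform"}`.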

But use this with extreme caution. I’ve always been incredibly uneasy about using the no-transform value to opt out of proxies. Users are choosing those proxy services or browsers intentionally. They’re opting into these sorts of optimizations and interventions, and it feels a bit uncomfortable to me when developers overrule those decisions.

If you are going to opt out using the no-transform value, then at least make sure you’re making ample use of the save-data header to reduce weight wherever you can: eliminating web fonts, serving low-quality images, etc.
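As a rough sketch of that advice, a server can check for `Save-Data: on` and pick lighter assets. The asset names and both function names are hypothetical, and dropping web fonts entirely is just one illustrative choice. The check compares only the first token of the header value, ignoring anything after a `;`.

```python
from typing import Dict, Mapping, Union


def wants_reduced_data(headers: Mapping[str, str]) -> bool:
    """True when the request carries Save-Data: on.

    Header names are case-insensitive; anything after ";" is ignored.
    """
    for name, value in headers.items():
        if name.lower() == "save-data":
            return value.split(";")[0].strip().lower() == "on"
    return False


def choose_assets(headers: Mapping[str, str]) -> Dict[str, Union[str, bool]]:
    """Hypothetical asset choice: a lighter image and no web fonts for Save-Data users."""
    if wants_reduced_data(headers):
        return {"hero_image": "hero-low.jpg", "load_web_fonts": False}
    return {"hero_image": "hero.jpg", "load_web_fonts": True}
```

If these responses pass through a shared cache, sending `Vary: Save-Data` keeps the lighter variant from being served to everyone (and vice versa).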

This is a good thing

Long story short, the NOSCRIPT intervention looks like a really great feature for users. More often than not it provides a significant reduction in data usage, not to mention the reduction in CPU time: no small thing for the many, many people running affordable, low-powered devices.

The Chrome folks, as you would expect, aren’t being haphazard with the intervention either. In fact, by (at least initially) relying on a whitelist, they’re being pretty conservative with it. It’s just one of many tricks in their bag to provide a more performant experience and they appear to be treading carefully when it comes to applying it.

What I love most about the intervention is the attention it has gotten from developers. JavaScript isn’t a given. Things go wrong.

I have mixed feelings about Google’s influence on the web (a subject for another post, perhaps) but bringing a little more attention to the reality that we can’t always rely on JavaScript (and providing a much more usable experience for many in the process) is something I can get behind.